Nodes are the essential building blocks in the Advanced interface of the Poser Material Room. They are the graphical representation of mathematical function calls, that is: calculation procedures which turn parameters (inputs) into a result (output).

Intermediate

Mathematically (rendering is all about endless computations, isn’t it?), the PoserSurface definition can be read as

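$$
\begin{aligned}
\text{PoserSurface} ={}& (\text{Diffuse} + \text{Alternate\_Diffuse}) \cdot (1 - \text{Transparency}) \\
&+ (\text{Specular} + \text{Alternate\_Specular}) \\
&+ (\text{Ambient} + \text{Translucence}) \\
&+ \text{Reflection} + \text{Refraction}
\end{aligned}
$$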

while Bump and Displacement act as special modifiers (and Transparency has various side effects when Reflection and Refraction use raytracing).
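As a rough illustration, the sum above can be written as a small Python function for a single surface spot. This is a sketch under simplifying assumptions: every term is a single grey value rather than an RGB color, the function and parameter names are made up for this example, and Bump, Displacement and the raytracing side effects of Transparency are left out.

```python
def poser_surface(*, diffuse=0.0, alternate_diffuse=0.0,
                  specular=0.0, alternate_specular=0.0,
                  ambient=0.0, translucence=0.0,
                  reflection=0.0, refraction=0.0,
                  transparency=0.0):
    """Hypothetical scalar version of the PoserSurface sum.

    Bump, Displacement and raytracing side effects are not modelled.
    """
    return ((diffuse + alternate_diffuse) * (1.0 - transparency)
            + (specular + alternate_specular)
            + (ambient + translucence)
            + reflection + refraction)


# Fully transparent: the diffuse terms drop out, specular and ambient remain.
print(poser_surface(diffuse=0.5, specular=0.25, ambient=0.25, transparency=1.0))  # 0.5
```

Note that in this reading Transparency only dims the diffuse part; specular, ambient and the raytraced terms are added on top regardless.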

A single component of PoserSurface, Diffuse for example, can be read as

$$
\text{Diffuse} = (\text{Diffuse\_Color} \cdot \text{DiffuseColor-input}) \cdot (\text{Diffuse\_Value} \cdot \text{DiffuseValue-input})
$$

where each “input” is the result of whatever node has its output connected to that slot. When no node is attached, the input acts as 1.0, that is: neutral in the multiplication.
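The same multiply pattern, including the neutral 1.0 for an empty input, can be sketched as follows. The function `component` is hypothetical; `None` stands for “no node attached”, and values are again scalar for simplicity.

```python
from typing import Optional


def component(color: float, color_input: Optional[float],
              value: float, value_input: Optional[float]) -> float:
    """Scalar sketch of Diffuse = (Diffuse_Color * input) * (Diffuse_Value * input).

    An input with no node attached is passed as None and treated as 1.0,
    the neutral element of multiplication.
    """
    ci = 1.0 if color_input is None else color_input
    vi = 1.0 if value_input is None else value_input
    return (color * ci) * (value * vi)


# Nothing attached: the dial values alone decide the result.
print(component(0.5, None, 0.5, None))  # 0.25
# A node returning 0.5 on the color input halves the result.
print(component(0.5, 0.5, 0.5, None))   # 0.125
```

A node that returns 0 on any of the inputs therefore zeroes the whole component, which matters for the lighting examples further on.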

Each node offers one output, which can be connected to (serve as input for) one or more (!) input connectors. Each input connector, however, accepts at most one output. If I want a combination of nodes attached to one input socket, I need an additional construction node in which I define the combining math myself.
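The connection rules can be modelled with a tiny, hypothetical node graph in Python: a socket is a dictionary key, so it holds at most one link, while one node object can be referenced from many sockets. The `Node` class, `evaluate` and the `add` node are for illustration only and are not Poser's own API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Node:
    """Hypothetical node model: a function, one output, and named input sockets."""
    name: str
    func: Callable[..., float]
    inputs: Dict[str, "Node"] = field(default_factory=dict)

    def connect(self, socket: str, source: "Node") -> None:
        # A socket holds at most one link, so connecting again replaces the old one.
        self.inputs[socket] = source


def evaluate(node: Node) -> float:
    # Evaluate every connected input first, then apply this node's own function.
    # Unconnected sockets fall back to the default values of `func`.
    args = {socket: evaluate(src) for socket, src in node.inputs.items()}
    return node.func(**args)


const_a = Node("const_a", lambda: 0.5)
const_b = Node("const_b", lambda: 0.25)

# One output may feed sockets on several nodes ...
a = Node("a", lambda x=1.0: x)
b = Node("b", lambda x=1.0: 2 * x)
a.connect("x", const_a)
b.connect("x", const_a)

# ... but two outputs cannot share one socket: an extra node has to do the math.
add = Node("add", lambda value_1=0.0, value_2=0.0: value_1 + value_2)
add.connect("value_1", const_a)
add.connect("value_2", const_b)
target = Node("target", lambda x=1.0: x)
target.connect("x", add)

print(evaluate(a), evaluate(b), evaluate(target))  # 0.5 1.0 0.75
```

Here the “construction node” is a plain addition; any other combining math (multiply, blend, maximum, …) would take its place in the same way.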

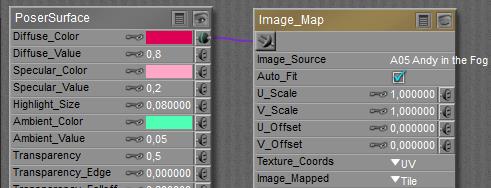

As the example above shows, a node (like Image_Map) offers inputs or parameters of various kinds. Some of them are ‘autonomous’, like the Image_source involved or the Auto-fit option; these cannot be driven by other nodes. Other inputs, like U_Scale, hold a dial value that filters (scales) the result of any node connected to them.

So far, all node inputs and outputs are visible in the interface and can be addressed explicitly. But be aware that many nodes also interact with the Poser scene or system in ways the interface does not show.

- The root node, PoserSurface, looks like a regular node with inputs, but its output slot is “missing”: it sends its results to the renderer instead.

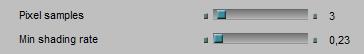

- Actually, things work the other way around: for each surface element the renderer needs a result for, it calls the PoserSurface function of that surface's material definition. That function calls the functions of the nodes attached to its inputs, which in turn call the functions of the nodes attached to their inputs, and so on. In other words: PoserSurface is not pushing results into the renderer; the renderer is pulling results from PoserSurface. Complex node trees therefore create large stacks of function calls, and those calls are made for each surface element in the rendering process. High values for pixel samples and/or low values for shading rate in the Render Settings increase the number of evaluations in the renderer, and hence the number of PoserSurface calls as well.

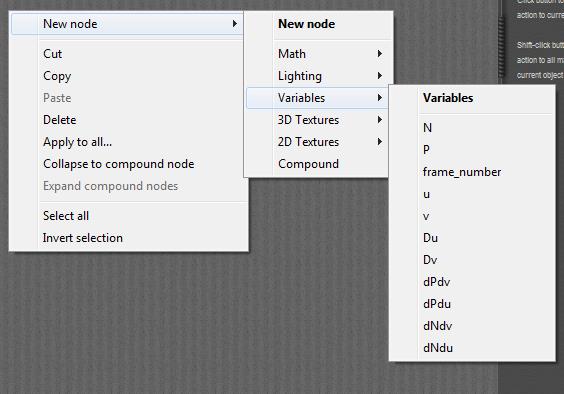

- Because of that, the coordinates of the surface spot, in space and time, are available to every node function called: U, V, W with respect to the surface, X, Y, Z with respect to scene space, Framenumber with respect to time, and a few more, as found in the Variables node group.

- Besides these ‘backdoor’ communications with the renderer and with scene position and time, nodes also communicate with the lights.

o The ones in the Lighting > Diffuse group (diffuse, clay, …) require diffuse lighting from direct or indirect sources to produce their result. No such lighting, no output (0, black, …).

o The ones in the Lighting > Specular group (specular, Blinn, …) require specular light, which only a direct infinite light, point light or spotlight can produce. No specular light on that spot of the surface means no output. IBL lamps and any light coming from objects (IDL, ambient, reflection, …) count as diffuse; even highlights on object surfaces produce diffuse light under IDL conditions and are not considered specular themselves.

o The ones in the Lighting > Special group (skin, velvet, …) serve both purposes: they respond to diffuse light as well as to specular light, and can even combine that with an autonomous ambient effect. Some of them require the Subsurface scattering option in Render Settings to be switched on in order to produce any output at all.

o The ones in the Lighting > Raytrace group (reflect, refract, …) require light from surrounding objects and do not work on the light from direct sources. When there are no such objects in front of (reflect) or behind (refract) the surface at hand, they can’t do their job and will produce their default ‘background’ response.
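The pull model and the ‘backdoor’ coordinates can be sketched together. In this hypothetical Python model, a renderer loop builds a context (U, V and frame number only) for every shading sample and calls the root function, which pulls from its input nodes; a counter shows how the number of node calls grows with the samples per pixel. All names are assumptions, and only the effect of pixel samples is modelled, not shading rate or lighting.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ShadingContext:
    """Hypothetical per-spot data the renderer makes available to every node function."""
    u: float
    v: float
    frame: int


calls: Dict[str, int] = {}


def counted(name: str, fn: Callable[[ShadingContext], float]) -> Callable[[ShadingContext], float]:
    # Wrap a node function so we can see how often the renderer pulls it.
    def wrapper(ctx: ShadingContext) -> float:
        calls[name] = calls.get(name, 0) + 1
        return fn(ctx)
    return wrapper


# Hypothetical nodes: a gradient along U and a flicker driven by the frame number.
gradient = counted("gradient", lambda ctx: ctx.u)
flicker = counted("flicker", lambda ctx: 1.0 if ctx.frame % 2 == 0 else 0.5)


def poser_surface(ctx: ShadingContext) -> float:
    # The root pulls from the nodes attached to it; they read the context, not the scene.
    return gradient(ctx) * flicker(ctx)


def render(samples_per_pixel: int, pixels: int, frame: int) -> List[float]:
    # The renderer drives evaluation: one PoserSurface call per shading sample.
    results = []
    for p in range(pixels):
        for s in range(samples_per_pixel):
            u = (p + (s + 0.5) / samples_per_pixel) / pixels
            results.append(poser_surface(ShadingContext(u=u, v=0.5, frame=frame)))
    return results


calls.clear()
render(samples_per_pixel=1, pixels=4, frame=0)
print(calls)  # {'gradient': 4, 'flicker': 4}
calls.clear()
render(samples_per_pixel=4, pixels=4, frame=0)
print(calls)  # {'gradient': 16, 'flicker': 16}
```

Quadrupling the samples quadruples every node call in the tree, which is why complex trees and high sample settings compound each other.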

For example,

- I’ve got an object that responds to diffuse or specular light and produces an ambient glow too, and I’ve got no lights at all in the scene. Then only the ambient glow shows, as the Diffuse and Specular components have nothing to respond to, and Reflection and Refraction lack any surrounding objects to work with.

- Now I connect the diffuse node to the Ambient_Color slot in an attempt to get some intensity distribution into the glow. What do I get? It kills the glow. The diffuse node receives no diffuse light from any source, so it produces a null response; that zero is the input to Ambient_Color, which therefore produces black as well.

- So… nodes like these require light falling onto the surface and produce a response to it; they do not produce light distributions of their own as output. No light, null response, black output.
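The example can be traced in a few lines of Python. The sketch assumes, for illustration only, that each light is reduced to a single diffuse intensity reaching the spot, that the Ambient_Color dial is 0.5, and that the hypothetical `diffuse_node` simply scales the incoming light; specular, reflection and refraction are taken as zero because the scene has no specular lights and no surrounding objects.

```python
from typing import List

# Hypothetical: each light reduced to the diffuse intensity it delivers to this spot.
Light = float


def diffuse_node(lights: List[Light], color: float = 1.0) -> float:
    # Lighting > Diffuse style node: a response to incoming light, zero without it.
    return color * sum(lights)


def ambient_term(ambient_color: float, ambient_value: float, color_input: float = 1.0) -> float:
    # Same multiply pattern as the Diffuse component: an unconnected input acts as 1.0.
    return ambient_color * color_input * ambient_value


def spot_result(lights: List[Light], diffuse_drives_ambient: bool) -> float:
    diffuse = diffuse_node(lights)
    glow_input = diffuse if diffuse_drives_ambient else 1.0
    ambient = ambient_term(0.5, 1.0, glow_input)
    return diffuse + ambient  # specular, reflection, refraction: 0 in this scene


print(spot_result([], diffuse_drives_ambient=False))     # 0.5  (glow only)
print(spot_result([], diffuse_drives_ambient=True))      # 0.0  (glow killed)
print(spot_result([0.5], diffuse_drives_ambient=True))   # 0.75 (a light brings it back)
```

The last line shows the same setup with one light added: the diffuse node now has something to respond to, so both the Diffuse component and the glow it drives come back.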