Nodes are the essential building blocks in the Advanced interface to the Poser Material Room. They are the graphical representation of mathematical function calls, that is, calculation procedures that turn parameters (inputs) into a result (output).

Intermediate

To show any kind of reflection in the render, the scene needs an environment to reflect. This might require building a complete scene behind the camera, which won’t show except in its reflections. As this can be quite tedious and far from cost-effective, one can use image maps instead. For just mimicking blurred reflections of far-away objects, skies and landscapes, any simple projection (mapping) of an appropriate image onto the reflection component will do. This, however, is unsatisfactory when sharper reflections are required.

Now, say, my scene sits under a sky dome and on a ground floor, and an image is mapped to these environmental objects. In the scene, a single fully reflective object is present. Then: how would the dome and ground be reflected from that object (assuming there are no other things around to reflect)? Rendering full, crisp raytraced reflections from a complex-shaped object can be time-consuming.

This is when applying a “spherical environmental reflection map” becomes useful. Just plug the Sphere Map (the only node in the Lighting > Environment Map group) into the PoserSurface Reflection_Color (or Alternate_Diffuse) slot, and the image that might have been used for the sky dome into the Sphere Map’s Color slot. Now I’ll see the same reflections, but I don’t need the dome, nor do I need any raytracing for the reflections.

I might want to Color filter the image though, and I might want to Rotate it to match any sky dome actually used.

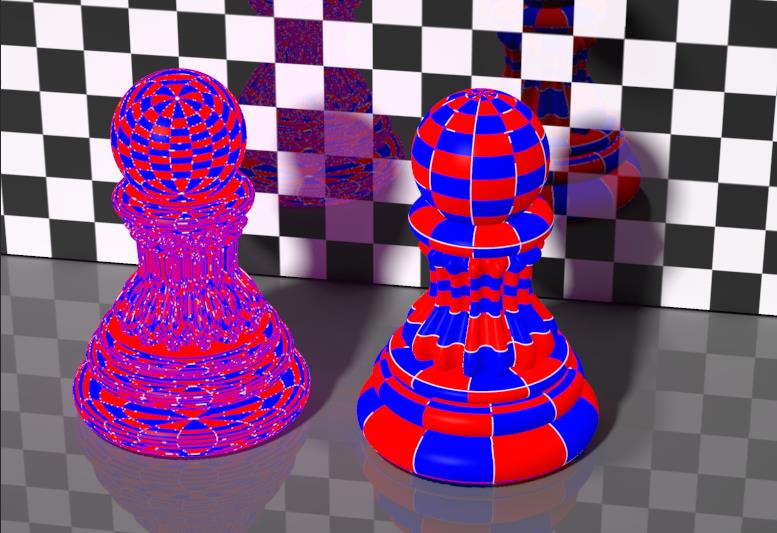

Now, is this any different from a regular mapping of the image around the object? Sure it is, have a look at this:

The right pawn has the tiling image mapped onto the object surface, in the Reflection or (alternate) diffuse component of the PoserSurface. The left pawn shows environmental spherical mapping, and looks like a mess at first sight, until I realize that the tiling image is mapped onto a (virtual) sky dome surrounding the scene, in the same way the tiles are mapped onto the head ball of the right pawn. The ‘converging point’ where the tiles come together is not on the object itself, but somewhere straight above all objects, high in the sky.

That colored sky dome is then reflected by the left pawn towards the camera, and that is what the spherically mapped texture shows, correctly. Where image mapping usually depends either on the shape of the object (UV mapping) or on the position of the object in the scene (XYZ mapping), this Environmental Spherical Reflection Mapping depends on the position of the object under the dome, relative to the camera. When the object moves, or the camera moves (or both), the mapping is adjusted accordingly.
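To make the camera dependence concrete, here is a minimal sketch of the general idea behind reflection-based environment lookups: mirror the viewing direction about the surface normal, then look up the dome image in the mirrored direction. This is not Poser's actual implementation. The function names, the Y-up axis convention and the latitude-longitude image layout are all assumptions made for illustration.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def reflect(incident, normal):
    """Mirror a unit incident direction about a unit normal: R = I - 2(N.I)N."""
    d = sum(i * n for i, n in zip(incident, normal))
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))

def dome_uv(direction):
    """Map a direction to (u, v) on a virtual dome image.

    Assumed layout (hypothetical, not taken from Poser): latitude-longitude,
    Y is up, v = 1 is straight overhead, u = 0.5 faces the +Z axis.
    """
    x, y, z = normalize(direction)
    u = 0.5 + math.atan2(x, z) / (2.0 * math.pi)
    v = 0.5 + math.asin(max(-1.0, min(1.0, y))) / math.pi
    return u, v

def environment_lookup(camera_pos, surface_pos, normal):
    """Return the dome (u, v) seen reflected at a surface point from the camera."""
    incident = normalize(tuple(s - c for s, c in zip(surface_pos, camera_pos)))
    return dome_uv(reflect(incident, normalize(normal)))

# Same surface point and normal, two camera positions: the lookup changes.
uv_front = environment_lookup((0.0, 0.0, 5.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
uv_side = environment_lookup((3.0, 0.0, 5.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
print(uv_front, uv_side)
```

Because the incident direction is computed from the camera position, moving either the camera or the object changes the lookup, which is the behavior described above. A surface tilted 45 degrees upward reflects a horizontal view ray straight up, towards the point on the dome where the tiles converge.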

Do we need it?

The obvious advantage is render speed. The obvious disadvantage is that it does not reflect any objects in the scene, let alone portions of the same object, since these are not in the image used. So, for stills of filled scenes rendered on modern, fast PCs using IDL illumination and other raytracing-heavy approaches, the regular Reflect (or even Fresnel) node might be the better choice.

But for those “shiny car on an empty road” advert-like animations, deploying this environmental spherical reflection mapping might be a game winner. And it might serve well in test runs too.