A few of them do, and here they are:


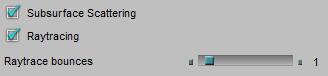

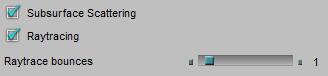

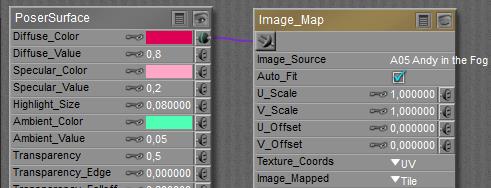

Switching OFF the Subsurface Scattering option (Poser 8 / Poser Pro and up) disables the subsurface scattering nodes, to save render time while testing.

Switching OFF the Raytrace option disables IDL lighting, as well as the nodes from the Lighting > Raytrace group. Again, this saves render time while testing. And, by the way, my lights can’t have raytraced shadows either then.

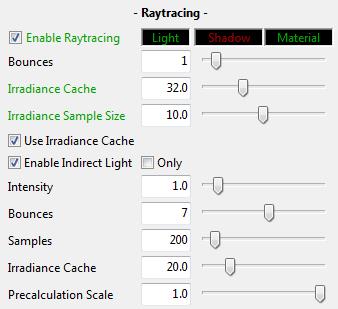

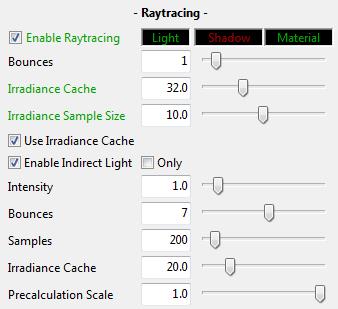

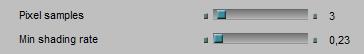

The Raytrace Bounces slider (from 0 to 12) affects IDL lighting as well as raytracing in reflections and the like. Each surface a ray bounces off or passes through counts as one, so passing through a refractive object takes two. Rays gradually die a bit with each bounce, and when the set limit is reached, the ray is killed anyway. This mainly affects internal reflections when reflectivity is combined with transparency.
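The counting rule above can be made concrete with a toy model. This is a minimal sketch, not Poser's renderer: the `trace` function, the event names and the `decay` factor of 0.8 are hypothetical stand-ins for "the ray dies a bit at each bounce".

```python
# Toy model of the Raytrace Bounces limit (hypothetical, not Poser's code).
# Bouncing off a surface costs one bounce; passing through a refractive
# object (in and out) costs two. `decay` is an assumed per-bounce loss.

def trace(events, max_bounces, decay=0.8):
    """Follow one ray through a sequence of surface events.

    events: iterable of "reflect" or "refract_object".
    Returns (energy, bounces_used, killed); a killed ray carries no light.
    """
    energy = 1.0
    bounces = 0
    for event in events:
        cost = 2 if event == "refract_object" else 1
        if bounces + cost > max_bounces:
            # Limit reached: the ray is killed and contributes nothing.
            return 0.0, bounces, True
        bounces += cost
        energy *= decay ** cost  # the ray weakens a bit at every bounce
    return energy, bounces, False


print(trace(["reflect", "refract_object"], 3))         # survives, uses all 3 bounces
print(trace(["refract_object", "refract_object"], 3))  # killed at the second object
```

Returning zero energy for a killed ray mimics the dark spots discussed in the next section: the pixel simply receives no light along that path.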

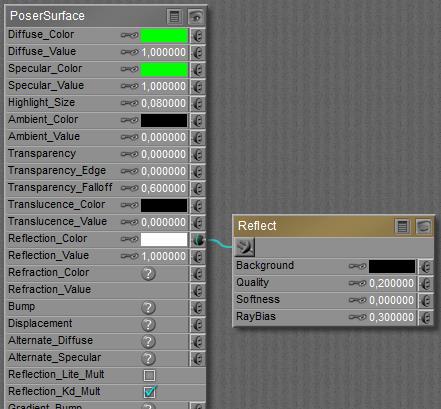

Reflection

Reducing Raytrace Bounces may leave pixels in the render that don’t receive a ray of light and remain dark. Or at least the reflection of an object is cut off somewhat. In other words: incomplete spots in the render, artefacts. The higher the slider is set, the lower the risk that they occur. And when the rays need only a few bounces anyway, a high value makes no difference and no killing takes place. The slider sets a maximum value.

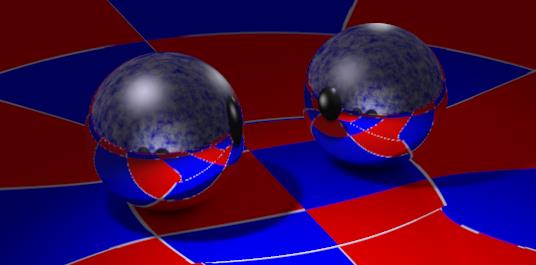

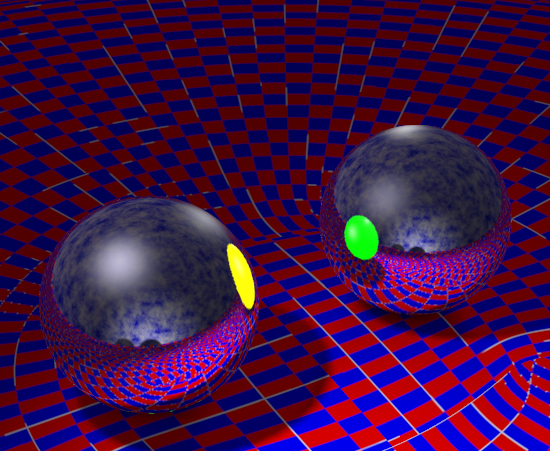

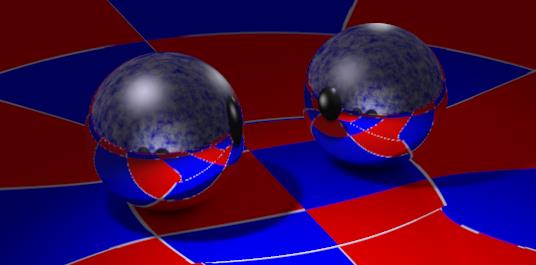

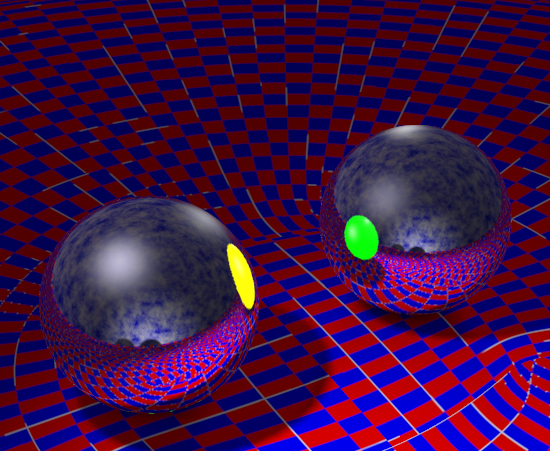

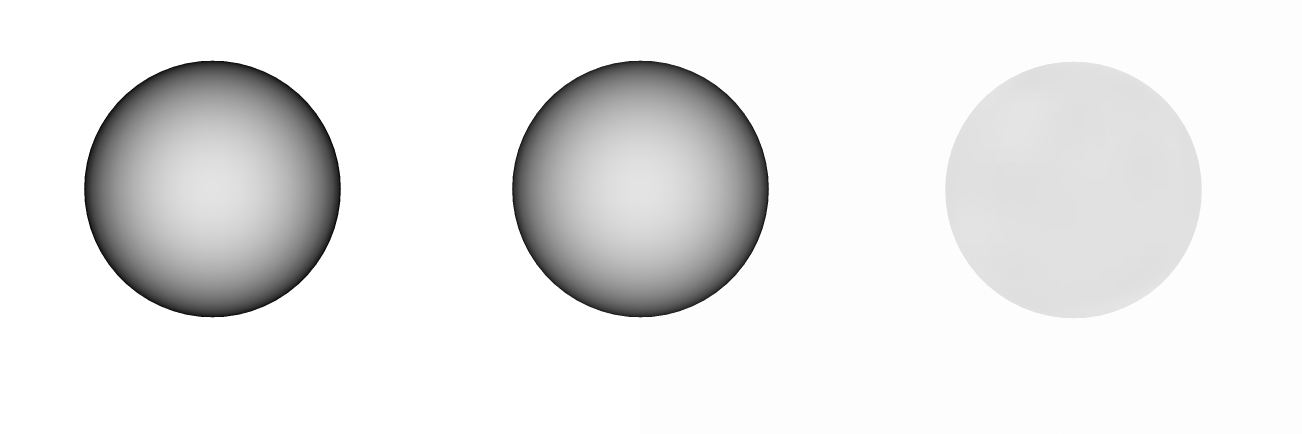

Reflect, max 1 bounce: each ball reflects the other, but not the other’s surface color, which is itself made by reflections:

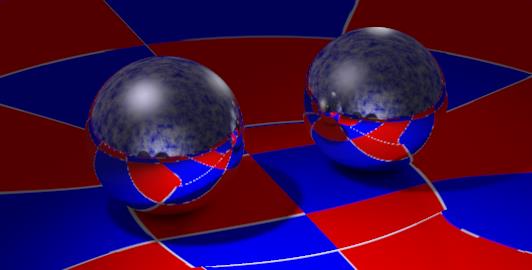

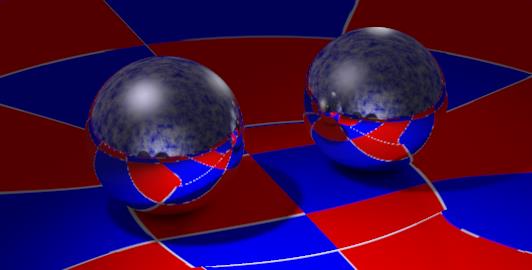

Reflect, max 2 bounces: each ball can reflect the other’s surface, but not its own reflection in it:

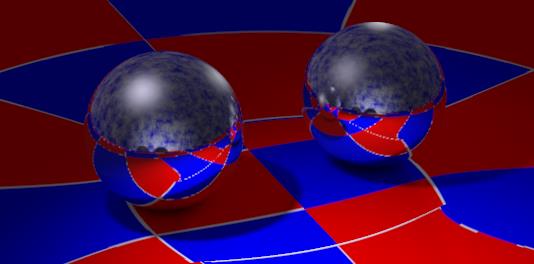

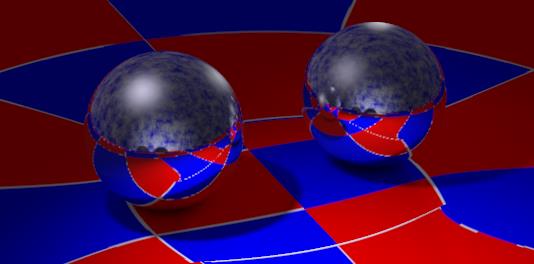

Reflect, max 4 bounces, and render times hardly increase:

The spots missing reflections are black because that’s the color set as Background in the Reflect node. I can use any other color, or white with an image attached.

In that case, the Raytrace Bounces slider mixes raytraced and image mapped reflections: for the first (so many) bounces the reflection is raytraced, and from then on it’s mapped.
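The mix of raytraced and mapped reflections can be sketched as a simple depth test. All names here are hypothetical, and the assumption that an attached image multiplies the Background color (so white passes the image through unchanged) is mine, not something taken from Poser's documentation.

```python
# Hypothetical sketch: raytrace reflections while within the bounce budget,
# otherwise fall back to the Reflect node's Background color, optionally
# multiplied by an image lookup (assumed behavior).
from typing import Callable, Optional, Tuple

Color = Tuple[float, float, float]


def reflection_color(depth: int, max_bounces: int,
                     traced: Callable[[], Color],
                     background: Color = (0.0, 0.0, 0.0),
                     env_lookup: Optional[Callable[[], Color]] = None) -> Color:
    if depth < max_bounces:
        return traced()  # still within budget: raytraced reflection
    if env_lookup is not None:
        # Beyond the limit: image-mapped reflection, tinted by Background.
        return tuple(b * e for b, e in zip(background, env_lookup()))
    return background  # no image attached: the plain Background color


def traced() -> Color:
    return (0.5, 0.5, 0.5)  # stand-in for an actual raytraced result


print(reflection_color(0, 1, traced))  # within the limit: traced
print(reflection_color(1, 1, traced))  # beyond it: black Background
```

With a white Background and an image attached, the fallback returns the image color as-is, which matches the "white with an image attached" setup.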

Increasing the slider hardly increases render times, though: reflection is an efficient process. This may change drastically when transparency is introduced as well. Then light not only reflects off the front, but also passes through the object to reflect off the (inside of the) back of the object. That ray in turn gets reflected off the inside of the front, and so on. Transparency combined with reflection produces the endless reflections of reflections of … that slow rendering down to the extreme.
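A rough way to see why transparency hurts so much is to count rays in the worst case. This sketch assumes that every hit on a reflective-and-transparent surface spawns both a reflected and a transmitted ray, while a purely reflective surface spawns only one. Real renderers also drop rays that have become too weak, so actual numbers will be lower.

```python
# Worst-case ray count for a single camera ray (simplified model).
# Reflection only: one new ray per hit, so the count grows linearly.
# Reflection + transparency: two new rays per hit, so it doubles per level.

def rays_traced(max_bounces: int, transparent: bool) -> int:
    branching = 2 if transparent else 1
    return sum(branching ** depth for depth in range(max_bounces + 1))


print(rays_traced(4, False))   # 5
print(rays_traced(4, True))    # 31
print(rays_traced(12, True))   # 8191
```

At the slider maximum of 12, the reflective-only case stays at 13 rays, while the transparent case can reach thousands.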

Refraction

First, we’ve got Transparency, which lets light pass through a surface: rays from direct light as well as from objects in the scene. It does so without raytracing, so it’s not affected at all by the Raytrace Bounces value. But it can’t bend light rays either.

Instead of transparency, or on top of it, Refraction also makes light rays bend when passing through a surface. And that’s raytracing. But like Reflection, Poser Refraction deals with objects only and does not handle direct light itself. Without transparency, refraction treats the surface as perfectly transparent for objects and applies bending as required.
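For reference, this is how a generic raytracer typically bends a ray, using the vector form of Snell’s law. It is a textbook sketch, not Poser’s internal code. The parameter `eta` (the ratio n1 / n2 of refractive indices) and the function name are my own. A ray passing through an object is bent twice, once when entering and once when leaving, which is why such an object costs two bounces.

```python
import math


def refract(d, n, eta):
    """Refract unit direction d at a surface with unit normal n.

    n must point back toward the incoming ray (d . n < 0);
    eta is the ratio n1 / n2 of the refractive indices.
    Returns the bent unit direction, or None on total internal reflection.
    """
    cos_i = -sum(a * b for a, b in zip(d, n))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # no transmitted ray: total internal reflection
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * a + (eta * cos_i - cos_t) * b for a, b in zip(d, n))


s = math.sqrt(0.5)
# Air (n = 1.0) into glass (n = 1.5) at 45 degrees: bends toward the normal.
print(refract((s, 0.0, -s), (0.0, 0.0, 1.0), 1.0 / 1.5))
# Glass into air at 60 degrees from the normal: total internal reflection.
a = math.radians(60)
print(refract((math.sin(a), 0.0, -math.cos(a)), (0.0, 0.0, 1.0), 1.5))
```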

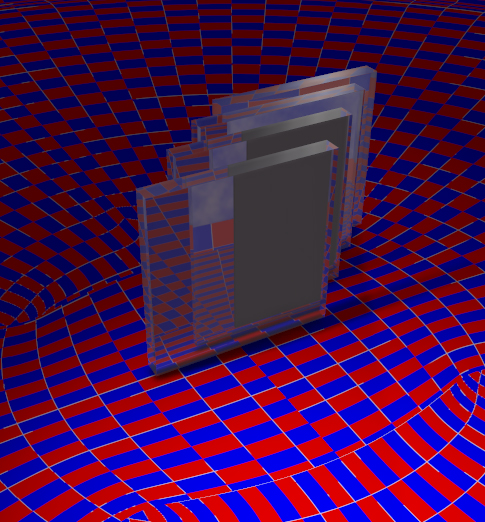

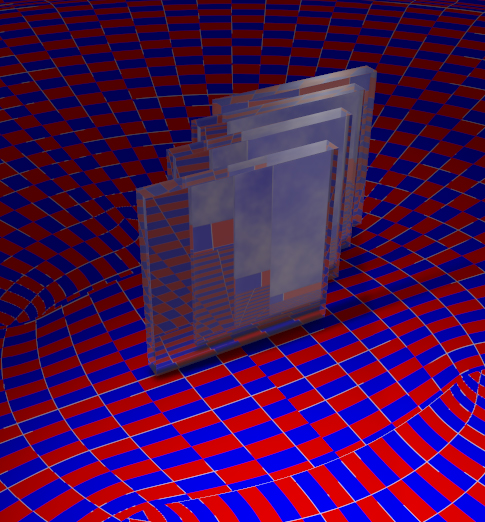

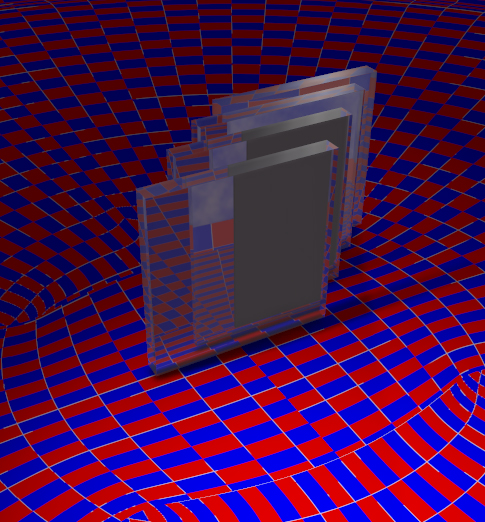

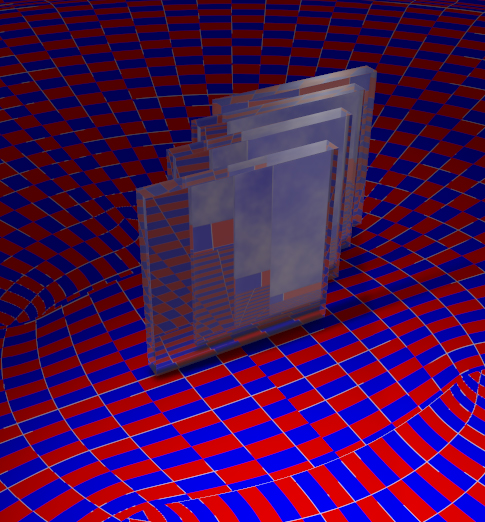

So when objects are placed relative to each other in a way that requires refraction of refraction of … in the scene, and the Raytrace Bounces value is reduced, the light stops passing through the surfaces and may cause artefacts similar to those in reflection. It depends on the number of objects, as each of them requires two bounces to let the light pass through. But two objects parallel to each other do not generate an infinite number of mutual refractions, as they can with reflection. Hence refraction does not need such high Raytrace Bounces values anyway.
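The arithmetic behind this is simple enough to check by hand. The sketch below assumes a ray lined up behind a row of refractive objects, each costing two bounces. Both function names are hypothetical.

```python
def bounces_needed(n_objects: int) -> int:
    """Bounces to see through n refractive objects in a row: in and out of each."""
    return 2 * n_objects


def objects_passed(n_objects: int, max_bounces: int) -> int:
    """How many of those objects a ray gets through before it is killed."""
    return min(n_objects, max_bounces // 2)


print(bounces_needed(4))     # 8
print(objects_passed(4, 4))  # 2: the rest of the row shows artefacts
print(objects_passed(4, 8))  # 4: all objects can be passed
```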

The value set is a maximum: when Poser doesn’t need the bounces it won’t use them, but if the number of bounces for a light ray exceeds this limit, the ray is killed. This may speed up rendering, but it may also introduce artefacts (black spots) in the result. The tradeoff is mine, but as nature has an infinite number of bounces, the maximum value is best when I can afford it.

Left: Raytrace Bounces set to 4, while the 4 objects require 8 bounces. Right: when the value is increased to 8 or more, all objects and surfaces can be passed.

And like reflection, refraction as such is quite an efficient process. Until transparency kicks in.

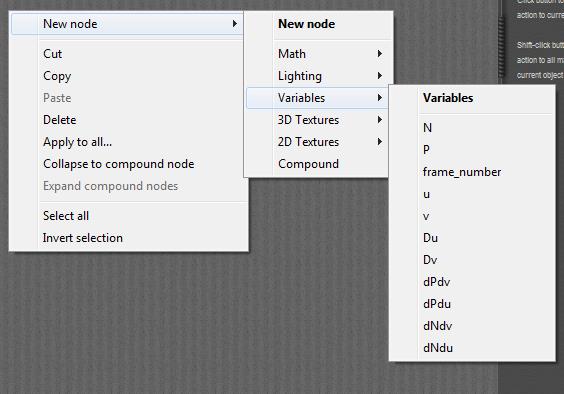

Indirect Lighting

To some extent, Indirect Lighting (IDL) is an application of reflection. Light hitting objects is diffused back into the scene, hitting other objects, and so on. At each bounce the ray dies a bit, and after so many bounces it gets killed if it is still around. In open scenes a ray might get lost in open space, but most scenes using IDL are enclosed within a dome. There, killing rays really reduces the number of rays around, and hence reduces the lighting level.
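The effect on the lighting level can be estimated with a geometric series. This sketch assumes a closed dome where every ray hits something, and that each surface returns a fixed fraction of the light. That fraction, called `albedo` here, is an assumed parameter and not a Poser setting.

```python
# Relative amount of light gathered in a closed dome when each bounce keeps
# a fraction `albedo` of the light (simplified, assumed model).

def idl_light(albedo: float, max_bounces: int) -> float:
    """Sum of albedo**k for k = 0..max_bounces: rays killed after the limit."""
    return sum(albedo ** k for k in range(max_bounces + 1))


def idl_light_unlimited(albedo: float) -> float:
    """Limit of the same series: nature's infinite number of bounces."""
    return 1.0 / (1.0 - albedo)


# With bright surfaces (albedo 0.8) and only 4 bounces, about two thirds
# of the "infinite bounce" light level is reached.
print(round(idl_light(0.8, 4) / idl_light_unlimited(0.8), 2))  # 0.67
```

The brighter the surfaces, the slower the series converges, so enclosed scenes with light-colored walls lose the most light to a low limit.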


Some scenes do show dark spots under IDL lighting, especially in the corners where walls and ceilings meet. That’s understandable: rays are bouncing around, and it takes some luck for a ray to end up right in such a corner instead of just bouncing off the sides near it. In such cases, killing rays early with a low Raytrace Bounces setting increases the risk of missing a corner, and the corners turn dark and splotchy. So an increased Raytrace Bounces value reduces those artefacts, just as it reduces artefacts in reflection itself.

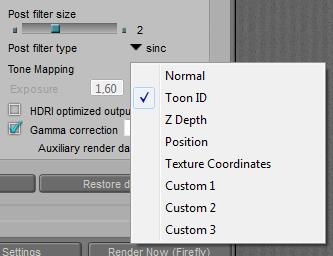

Note that launching the render via the Scripts > partners > Dimension3D > Render Firefly menu lets me set separate limits for raytrace bounces and IDL bounces. So I can increase the latter without paying for large values of the former.

When I’ve also got direct lights in the scene (like a photographer using a flash outdoors in the sun), this increase in IDL lighting levels changes the balance between direct and indirect light. I might want to correct for that by adjusting the intensity of the light sources themselves.


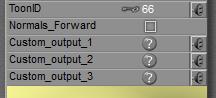

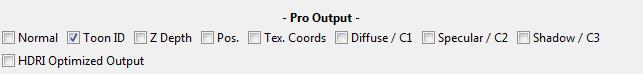

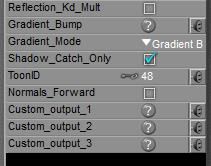

Like the ToonID, checking the Custom 1, 2 and/or 3 Auxiliary render data options in Render Settings enables extra layers in the export of the render result in Photoshop PSD format. This PSD layered export is available in Poser Pro only, and so are the three Custom fields.


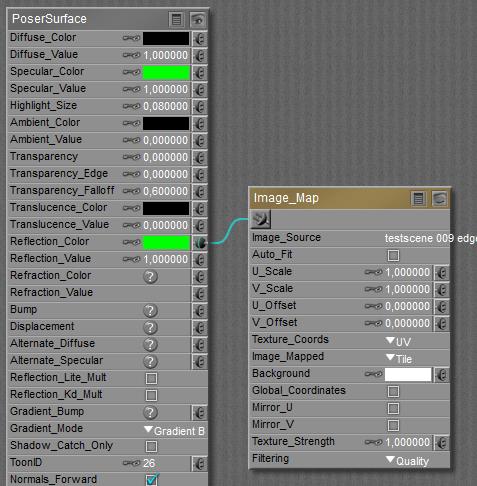

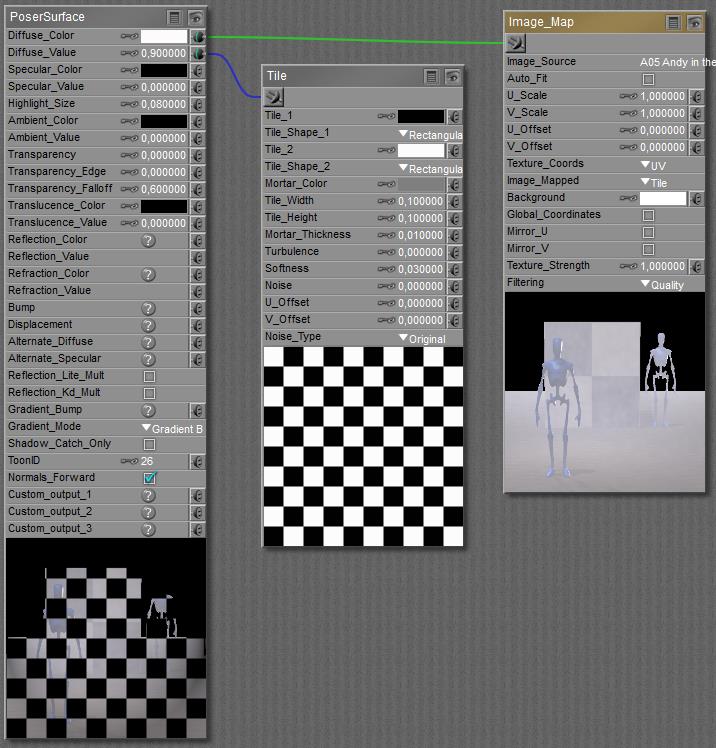

Each PoserSurface has a ToonID value assigned, and one can even make it vary over time (animated) as well as drive it by a node construction, like a complex of


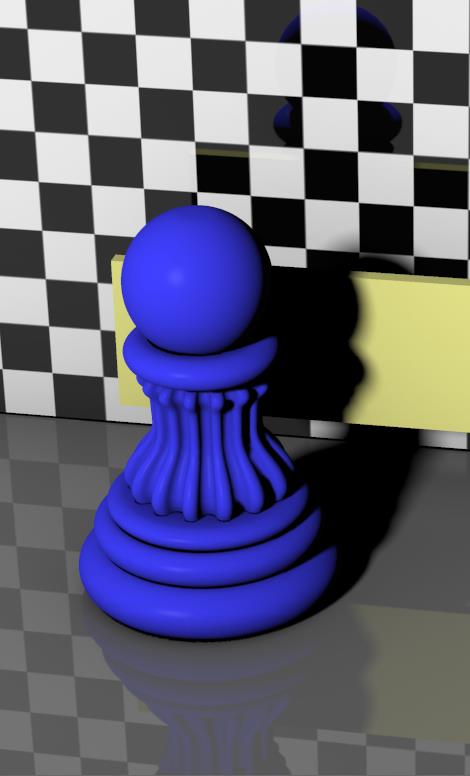

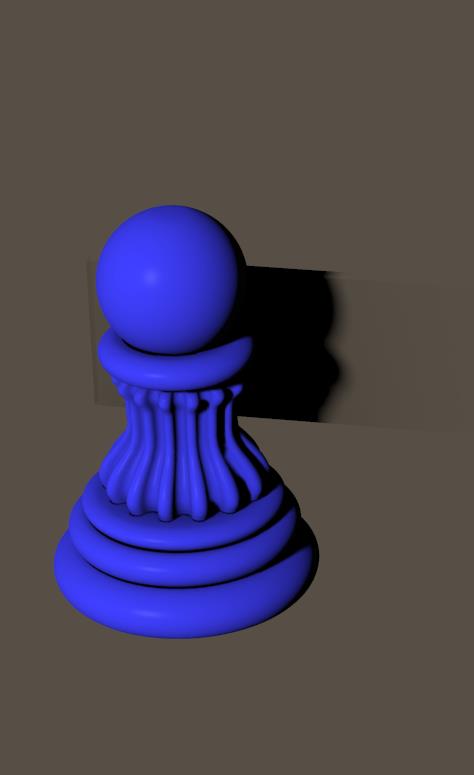

By switching ON the Shadow Catch Only option in a PoserSurface definition, the surface disappears completely, except for the shadows cast onto it by other objects, and by itself as well.



Option ON


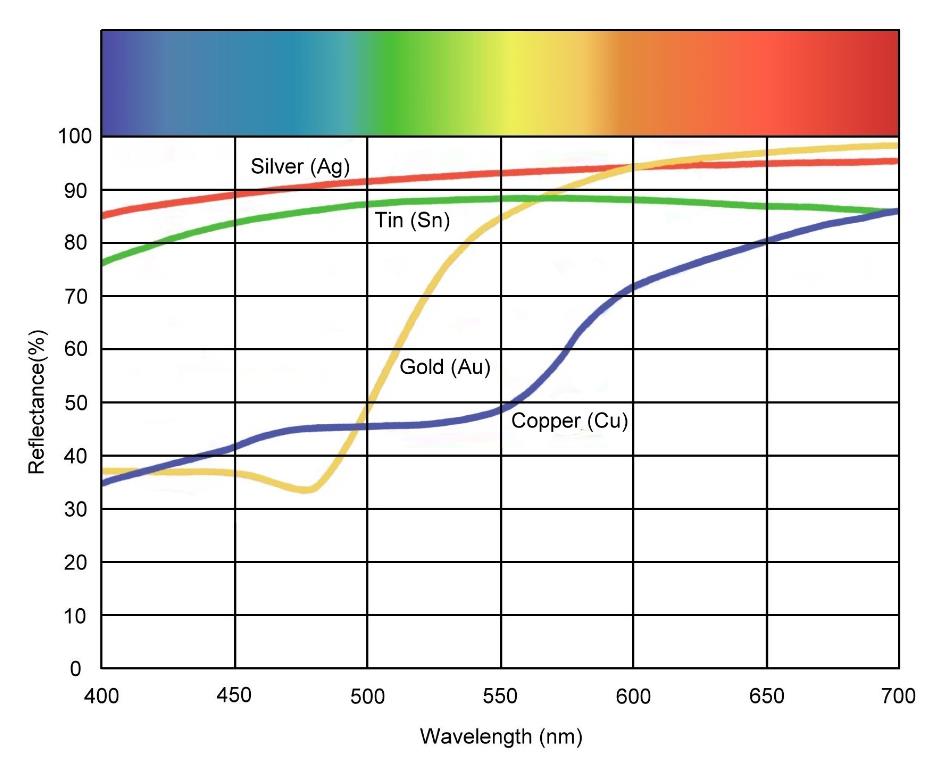

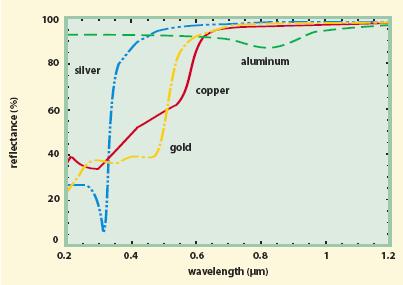

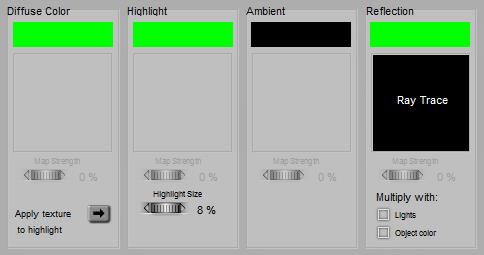

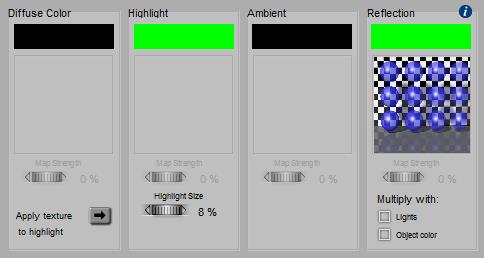

Strictly speaking, metals have high reflectivity and no diffuse. So ensure that there is always something to reflect, using Raytrace (above) or an image (below). As there is no Diffuse, do not multiply with it!
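Why multiplying with Diffuse ruins a metal is easy to show with a simplified additive shading model. This is a hypothetical sketch: the function, its parameters and the additive combination are illustrative assumptions, not Poser’s actual PoserSurface math.

```python
from typing import Tuple

Color = Tuple[float, float, float]


def shade(diffuse_color: Color, diffuse_value: float,
          reflection: Color, reflection_value: float,
          multiply_with_diffuse: bool) -> Color:
    """Add a diffuse term and a reflection term (simplified, hypothetical model)."""
    diffuse = tuple(c * diffuse_value for c in diffuse_color)
    refl = tuple(c * reflection_value for c in reflection)
    if multiply_with_diffuse:
        # Multiply the reflection by the diffuse result; with no diffuse, this is zero.
        refl = tuple(r * d for r, d in zip(refl, diffuse))
    return tuple(d + r for d, r in zip(diffuse, refl))


gold_reflection = (0.9, 0.8, 0.5)  # whatever the raytrace or the image returns
print(shade((1.0, 1.0, 1.0), 0.0, gold_reflection, 1.0, False))  # (0.9, 0.8, 0.5)
print(shade((1.0, 1.0, 1.0), 0.0, gold_reflection, 1.0, True))   # (0.0, 0.0, 0.0)
```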


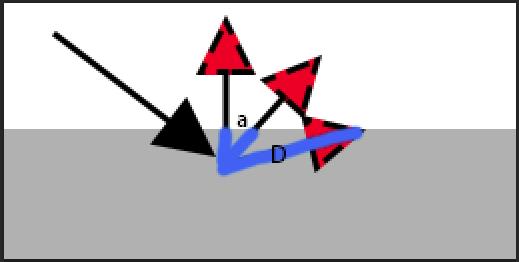

As this distance D is inversely proportional to the cosine of the exiting angle (D = D0 / cos(a)), the intensity of the diffuse light in that direction is proportional to that cosine: I = I0 · cos(a). This is the angular distribution of outgoing diffuse light according to the mathematician J.H. Lambert (about 1760). For light scattered perpendicular to the surface (along the normal), a = 0, so cos(a) = 1 and the response is maximal, while for light scattered parallel to the surface, cos(a) = 0 and there is no response at all.
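Lambert’s cosine law from the paragraph above is a one-liner. In this sketch, the angle a is measured from the surface normal, and negative cosines are clamped to zero because no light leaves the back of the surface. The function name is my own.

```python
import math


def lambert_intensity(i0: float, angle_deg: float) -> float:
    """I = I0 * cos(a), with a measured from the surface normal."""
    return i0 * max(0.0, math.cos(math.radians(angle_deg)))


for a in (0, 30, 60, 90):
    print(a, round(lambert_intensity(1.0, a), 3))
```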

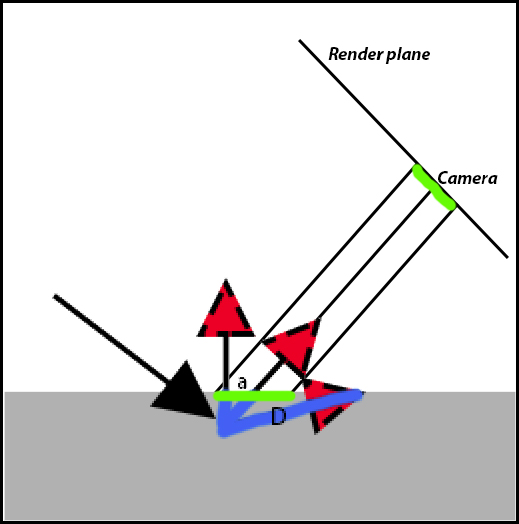


Now, look at what happens to the render result. A specific area on the render plane (say: a pixel), marked green in the illustration, gets its light from an area on the object surface (marked green as well). At more oblique angles a between the surface normal and the camera, this area on the object gets larger: A = A0 / cos(a).
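Combining the two formulas, I = I0 · cos(a) and A = A0 / cos(a), the cosines cancel. In this idealized model, the light reaching one pixel does not depend on the angle. This is the standard result that an ideal diffuse (Lambertian) surface looks equally bright from every viewing direction. The function name below is hypothetical.

```python
import math


def pixel_light(i0: float, a0: float, angle_deg: float) -> float:
    """Light reaching one pixel: Lambert intensity times the visible surface area."""
    if not 0 <= angle_deg < 90:
        raise ValueError("angle must be in [0, 90) degrees")
    c = math.cos(math.radians(angle_deg))
    intensity = i0 * c  # I = I0 * cos(a)
    area = a0 / c       # A = A0 / cos(a)
    return intensity * area


for a in (0, 45, 80):
    print(a, round(pixel_light(1.0, 1.0, a), 6))
```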

This holds for Ambient and Translucence, and for Reflection and Refraction. Diffuse and Specular are similar, but include a built-in shader as well, which manages the distribution of light intensities over the surface. See the
