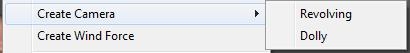

The Poser camera is my eye into the virtual world. There are various kinds of special purpose cameras available, and (via menu Object \ Create Camera) I can add new ones.

The Left / Right, Bottom / Top and Front / Back cameras are orthographic (often loosely called “orthogonal”) or “2D”. This means that an object keeps its apparent size regardless of its distance to the camera, which is bad for believable images but great for aligning objects and body parts. These cameras help me position props and figures in the scene.

All other cameras are “perspective” or “3D” ones, which means that objects at a larger distance appear smaller.

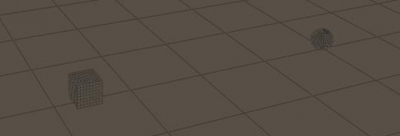

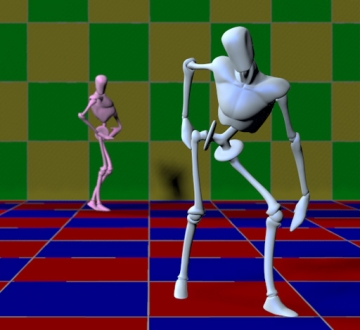

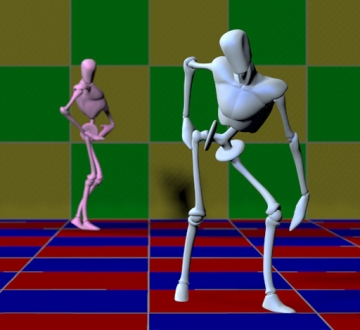

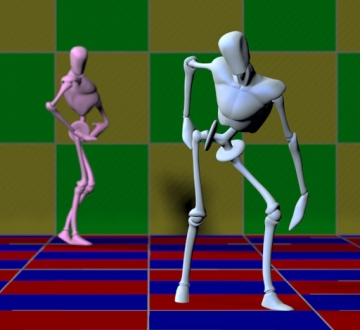

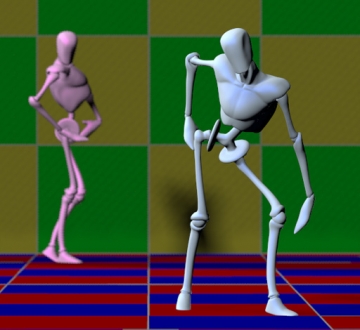

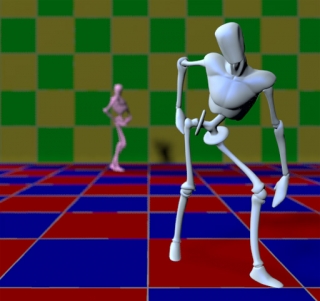

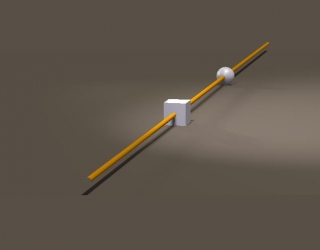

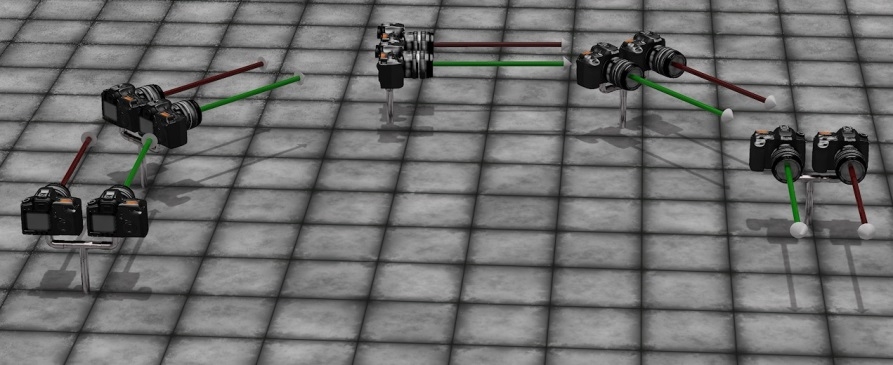

|

Two objects with some distance |

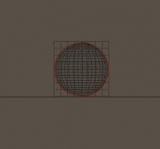

As seen by the Left (2D) camera |

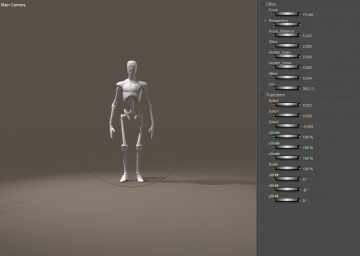

As seen by the Main (3D) camera |
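The difference between the 2D and the 3D cameras can be put into a few lines of Python. This is only a sketch of the two projection types under a simple pinhole assumption, not Poser's own code; the function names and the `focal` parameter are my own, made up for illustration.

```python
def apparent_size_orthographic(height, distance):
    """2D camera: the apparent size ignores the distance completely."""
    return height


def apparent_size_perspective(height, distance, focal=1.0):
    """3D camera (pinhole assumption): size shrinks in proportion to distance."""
    return focal * height / distance


# Two equally tall objects, one twice as far away as the other.
near_2d = apparent_size_orthographic(2.0, 4.0)
far_2d = apparent_size_orthographic(2.0, 8.0)
near_3d = apparent_size_perspective(2.0, 4.0)
far_3d = apparent_size_perspective(2.0, 8.0)
```

In the 2D case both objects come out equally large, which is why those cameras are so handy for aligning things. In the 3D case the far object is half the size of the near one.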

The Posing, Left/Right-hand and Face cameras are constrained to the selected figure, and are meant to support the posing of body and hands, facial expressions and the precise positioning of hair and jewelry. These cameras help me pose figures the right way. This implies that when I select another figure in the scene, the Posing, Hand or Face camera will immediately show something different.

The Shadow Cams help me aim spotlights. The camera looks through the lamp into the scene, which gives me a fine way of positioning those lights. For Infinite lights and Point lights such an aid is far less relevant.

Camera type

Poser gives me, in each new scene, three cameras to walk through the scene, perform inspections and take other points of view. These are the Main cam and the Aux cam (both of the Revolving kind) and the Dolly cam (of the Dolly kind).

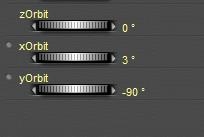

Revolving cameras live in Poser space. They rotate around the center (0,0,0) and around the Poser X, Y and Z axes through that center. The angles are called xOrbit, yOrbit and zOrbit. They move along their own local axes, so when such a camera looks down, a Y-translation makes it move sideways, not up or down. More precisely: the camera origin rotates as stated and the camera itself translates relative to that origin. This is very satisfying from a computational point of view, but very confusing for us, humble human users.

Dolly cameras live in their own space, rotate around their own center and their own axes, like any other regular object.

Roll means rotating around the forward axis, like a plane taking a turn.

Pitch means rotating around the local left-right axis, making the nose of a ship (or plane) go up and down.

Yaw means rotating around the local up-down axis, which is what makes people seasick. Dolly cameras move along the Poser X, Y and Z axes, so if I alter the DollyY value they just move up or down, whatever they’re looking at.
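To make the difference in translation behavior tangible, here is a small Python sketch. It is my own simplification and not how Poser computes things internally: I only model a camera pitched around the X axis, and the function names and sign conventions are assumptions.

```python
import math


def rotate_about_x(vec, degrees):
    """Rotate a 3D vector around the world X axis by the given angle."""
    x, y, z = vec
    a = math.radians(degrees)
    return (x,
            y * math.cos(a) - z * math.sin(a),
            y * math.sin(a) + z * math.cos(a))


def revolving_y_move(amount, pitch_degrees):
    """Revolving kind: the Y move follows the camera's own, rotated, up axis."""
    return rotate_about_x((0.0, amount, 0.0), pitch_degrees)


def dolly_y_move(amount, pitch_degrees):
    """Dolly kind: the Y move follows the Poser Y axis, whatever the pitch."""
    return (0.0, amount, 0.0)


# Camera looking straight down (pitched by 90 degrees), moved 1 unit in Y.
revolving = revolving_y_move(1.0, -90.0)
dolly = dolly_y_move(1.0, -90.0)
```

With the camera looking straight down, the revolving move ends up along the Z axis with practically no height change, while the dolly move is still purely up.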

Main and Aux represent nearby and far-away director overview cams, fixed to the studio. The photographer’s camera, moving through the scene, perhaps even animated, and shooting at various angles at will, is best represented by a Dolly camera. So when I create a new camera, I choose the Dolly kind. Their transform parameters (move, rotate) are easier to understand, and especially their animation curves are easier to interpret.

To add some artistic words on camera angle:

- I keep the horizon straight, especially in landscapes, unless I’ve good artistic and dramatic reasons not to. The reason is that my audience looking at my image will have a problem identifying themselves with the photographer when they have to twist their neck. In Poser camera terms: don’t Roll.

- I shoot straight, in Poser camera terms: I don’t Pitch either. This is interesting because most people tend to take pictures while standing upright and have the camera angle being determined by the position and size of the object. Animals, kids and flowers tend to be shot downwards while basketball players, flags and church bells tend to be shot upwards. So boring, so predictable: we see things in that perspective every day.

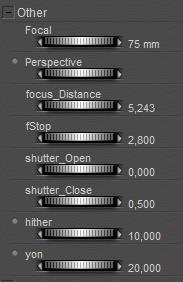

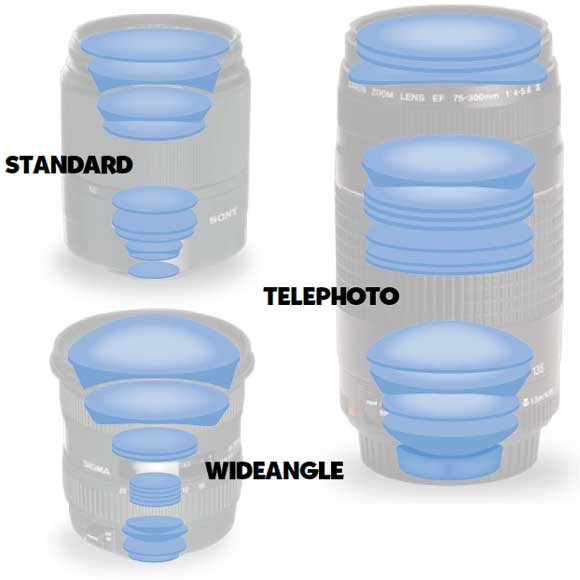

Focal length

Now let me put the camera at some distance from the scene or the subject. Depending on what I want to accomplish in my image, I have to zoom in or out by adjusting the focal length. The “normal” focal length for modern digital consumer cameras is 35mm, for analog cameras it was 50mm, and for current Hasselblads it’s 80mm. So, 50mm is considered wide angle for a Hasselblad, normal for an outdated analog camera and mild zoom for a consumer digital one. Poser follows the route of consumer digital. The 55mm initial setting for the Main camera is good for groups and studio work but needs zooming in for portraits. The Dolly camera initially reads 35mm and the Aux camera reads 25mm, which fits its overview role. New cameras are set to 25mm initially and do need adjustment.

Perhaps you’ve noticed that in real life the modern consumer digital cameras are quite a bit smaller, and especially thinner, than the older analog ones. You may know (otherwise: have a look at hasselblad.com) that pro cameras are bigger. And yes indeed, there is a simple proportional relationship between camera size and its normal focal length. Poser supports this relationship to some extent: take the Main camera and increase the Scale to 150%. You will see it zooming out, while keeping the same value for its focal length.

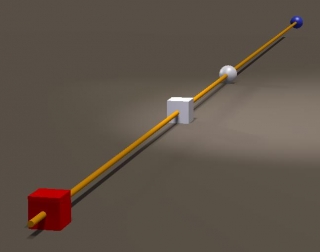

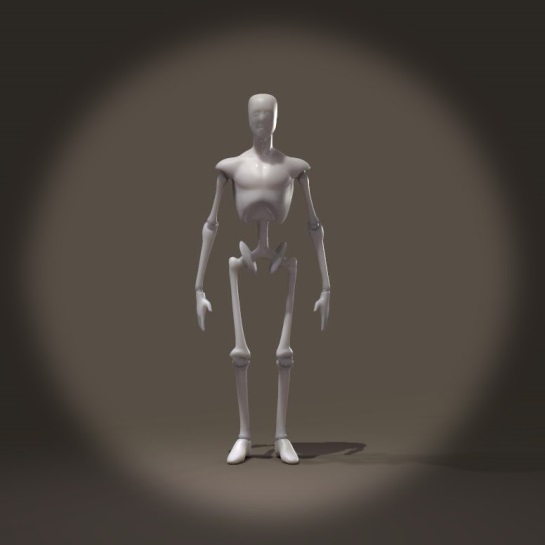

Scale 100%, F=75mm

Scale 150%, F=75mm

A larger camera needs a larger focal length to establish the same amount of zoom. But Poser is cheating a bit, which can be seen when I use the Aux camera to watch the behavior of the Main Cam. When scaling up the Main cam, it not only grows but it also moves back from the scene, while keeping its DollyX,Y,Z intact.

This is because a Poser Main or Aux camera is the whole thing from 0,0,0 to the view plane capturing the image, and it’s that whole thing which is scaling.
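The relation between camera size, focal length and zoom can be sketched with the standard angle-of-view formula for a simple lens. Mind the assumptions: the 36mm frame width is just the classic 35mm film frame that I picked for illustration, not a value I know Poser uses, and `angle_of_view` is my own helper name.

```python
import math


def angle_of_view(focal_mm, frame_width_mm):
    """Horizontal angle of view, in degrees, for a simple (pinhole) lens."""
    return math.degrees(2 * math.atan(frame_width_mm / (2 * focal_mm)))


FRAME_WIDTH = 36.0  # assumed frame width in mm, for illustration only

normal = angle_of_view(75.0, FRAME_WIDTH)             # scale 100%, F=75mm
scaled_up = angle_of_view(75.0, FRAME_WIDTH * 1.5)    # scale 150%, F=75mm
compensated = angle_of_view(75.0 * 1.5, FRAME_WIDTH * 1.5)
```

The camera scaled to 150% sees a wider angle at the same focal length, so it zooms out. Only when the focal length grows by the same 150% does the angle of view return to its original value.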

A camera which moves back while the DollyX,Y,Z values remain intact should ring a bell: apparently things are measured in “camera units” which are scaling as well. And indeed: the Focal Distance changes too. Try it:

- Load just Andy and use just the Main cam, and you’ll find Andy at a Focal Distance of 11.7 (m).

- Scale the Main cam to 150%, and you’ll find Andy’s Focal Distance at 7.8 (m) = 11.7 / 150%.

So while the camera retracts the focal distance DEcreases. Sometimes, Poser is black magic.
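The “camera units” idea from the experiment above boils down to one division. This is a sketch of the arithmetic only; the helper name `in_camera_units` is mine.

```python
def in_camera_units(value_at_100_percent, camera_scale):
    """Convert a distance read at scale 100% into units of a scaled camera."""
    return value_at_100_percent / camera_scale


# Andy's focal distance with the Main cam at 100% and at 150%.
at_100 = in_camera_units(11.7, 1.0)
at_150 = round(in_camera_units(11.7, 1.5), 1)
```

The second value comes out at 7.8, the number the Focal Distance dial shows after scaling the camera.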

Another magical thing: some Poser cameras can scale, others cannot. Main, Aux, Dolly and even Posing cams can scale, but L/R-hand and all user added cams cannot. To some extent this is a good thing, because from the above we learn to watch camera scale as it might present more problems and unexpected results than it does any good. Since I make a habit of shooting my final renders with my own cam, instead of a built-in Poser one, I don’t run this risk.

On top of that, cameras show a Focal and a Perspective parameter. Actually, the 2D cameras (Left/Right etc.) show a Perspective setting only and go berserk when I try to change it. Other cameras, like the Dolly cam, show the Focal parameter only. Still other cams show both, and both parameters consistently show the same value. So, what’s up?

Perspective

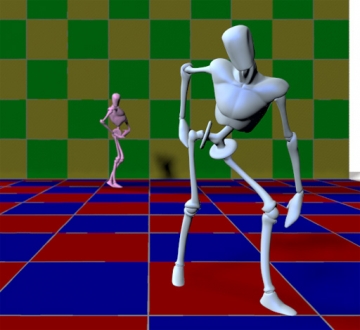

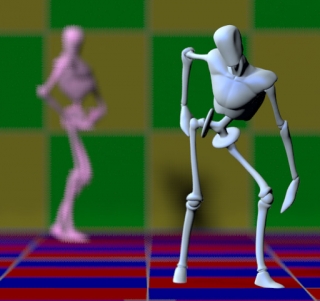

Have a look at this simple scene, where I enlarge the focal length while walking backwards so Blue Andy remains about the same size in the shot.

20mm (scale 100% fStop=5.6):

As you can see, zooming in not only gives a smaller (narrowed) portion of the scene, it also brings the background forward. In other words: zooming flattens the image.
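Why the background comes forward can be shown with a little pinhole arithmetic. The distances and heights below are made-up numbers, not the ones from my scene, and the two helper functions are my own.

```python
def image_size(height, distance, focal_mm):
    """Relative size in the image under a pinhole assumption."""
    return focal_mm * height / distance


def distance_for_same_size(old_distance, old_focal, new_focal):
    """How far I have to stand to keep the subject equally large."""
    return old_distance * new_focal / old_focal


GAP = 10.0  # assumed distance between the subject and the background object

# Start at 20mm, 2 units from the subject, then zoom to 120mm and walk back.
d20 = 2.0
d120 = distance_for_same_size(d20, 20.0, 120.0)

subject_20 = image_size(1.8, d20, 20.0)
subject_120 = image_size(1.8, d120, 120.0)
background_20 = image_size(1.8, d20 + GAP, 20.0)
background_120 = image_size(1.8, d120 + GAP, 120.0)
```

The subject keeps its size, but an equally tall object in the background becomes more than three times as large at 120mm. That is the flattening effect.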

Actually, while zooming in I’ve got to walk backwards quite a lot, which might be undesirable in various cases. This is where the Perspective parameter comes into play. When changing the Perspective from 55 to 120 (218%), I will notice a change in camera size (scale 100% => 218%, zooming out) and a drop in the DollyX,Y,Z values (by a factor of 1/218%). The scaling enlarges the “camera distance unit”, so this change in Dolly values actually makes me stay in the same position in the scene. At the same time the focal length goes up, zooming in. In order to keep Blue Andy about the same size in the image I still have to walk backwards, but far less. Simply put: if I use the old 100% DollyX,Y,Z numbers, I’m standing in the right place, Blue Andy has his original size but the perspective of the scene is that of the 120mm zoom lens.

Again: when I change the Perspective (instead of the focal length dial) and I keep the DollyZ etc. values intact, then the foreground of the scene remains the same while the background comes forward, and I slowly move backward myself. Even the focal distance can keep its value, as it’s measured in the same “camera distance units”.

If you keep standing in place and take the DollyZ as the Perspective dial presents it to you, don’t forget to reduce the focal distance (in this example: by a factor of 1/218%, or from say 6 to say 3). This, for whatever reason, is not done by the Perspective dial.
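The bookkeeping of the Perspective dial, as I observe it above, can be summarized in a few lines. This is a sketch of the arithmetic only, not a call into Poser; the function name and the Dolly values are made up for illustration.

```python
def perspective_change(old_focal, new_focal, dolly_xyz, focal_distance):
    """Mimic what I see when turning the Perspective dial.

    Returns the new camera scale, the Dolly values as the dial presents
    them, and the focal distance I have to set by hand when I accept them.
    """
    ratio = new_focal / old_focal
    new_scale = ratio                                   # 1.0 means 100%
    new_dolly = tuple(v / ratio for v in dolly_xyz)     # same spot in the scene
    adjusted_focal_distance = focal_distance / ratio    # not done by the dial
    return new_scale, new_dolly, adjusted_focal_distance


scale, dolly, focal_distance = perspective_change(
    55.0, 120.0, dolly_xyz=(0.0, 1.5, 6.0), focal_distance=6.0)
scale_percent = round(scale * 100)
```

For the change from 55 to 120 the scale comes out at 218%, and a focal distance of 6 has to go down to about 2.75, the “say 3” mentioned above.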

Note: some Poser cameras (e.g. the L/R-hand cams) have no Scale parameter, but I can still change Perspective. This only changes the focal length, so I just use that one instead. Scale remains at 100%. Some Poser cameras (e.g. the Dolly cam) have no Perspective parameter, but I can change Scale by hand (and DollyX,Y,Z as well). Some Poser cameras lack both, so I cannot use this Perspective feature at all. This is the case for user added cameras. When I use one of those for my final imaging I don’t have to bother with Scaling and Perspective tricks and troubles. I just cannot turn my digital consumer cam into a Hasselblad by spinning a dial.


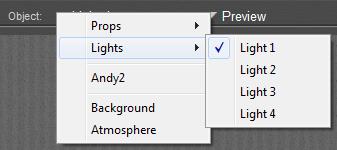

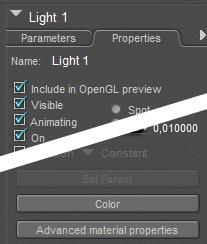

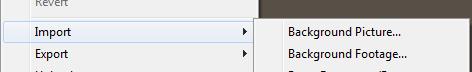

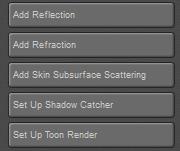

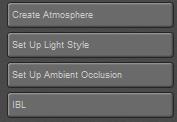

Next to that, there are some additional ways into Material Room, for the ‘material properties’ of Lights, Atmospheres, Backgrounds, and for some specific surface properties:

and with the Shadow Color picker just right/below the Document window one affects the

The [Create Atmosphere] button however affects the
